Data Analytics

In the EnOS 2.1 release, we added new features to the Data Integration and Data Exploration services and introduced the ML Model Hosting service. Details are as follows:

Data Integration

In the EnOS 2.1 release, we added support for PostgreSQL as a target database. You can create data integration tasks that synchronize data from EnOS Hive to an external PostgreSQL database for data analysis. For more information, see Synchronizing Data From EnOS Hive to Target Datastore.

Data Exploration

Jupyter Notebook

In addition to Zeppelin Notebook, we added support for the Jupyter Notebook data exploration tool, which provides Python 2.x, Python 3.x, and R kernels. Jupyter Notebook is an interactive data exploration tool that lets data engineers and data scientists process and visualize data in the same environment and create and share code and documents, which simplifies their workflow and improves productivity and collaboration.

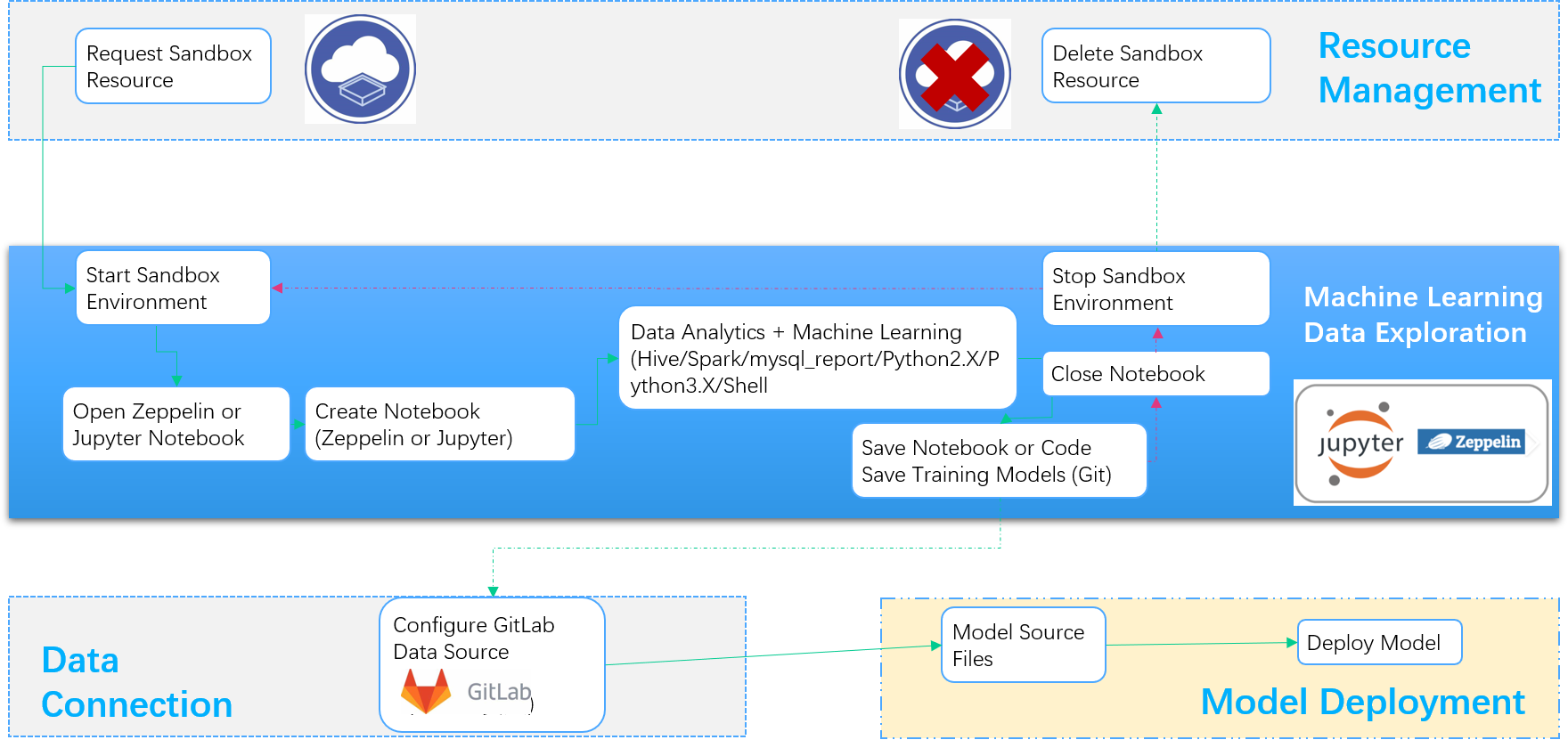

The workflow of data exploration is as follows:

The general process of creating and using a Jupyter Notebook is as follows:

For more information, see Using Jupyter Notebook.

Queue Resource Configuration

By default, the Hive and Spark interpreters in Zeppelin Notebook use the default queue for data query and processing, which can make job execution hard to control and processing resources hard to manage. To run resource-intensive query and processing jobs, request Batch Data Processing resources and configure the interpreter with the name of the requested queue.
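As a rough illustration, the Python sketch below maps each interpreter to the property that would carry the requested queue name. It assumes the interpreters submit jobs to YARN and honor the standard `spark.yarn.queue` property (Spark) and `mapreduce.job.queuename` property (Hive on MapReduce; Hive on Tez uses `tez.queue.name` instead). The helper `queue_properties` and the queue name `my_batch_queue` are hypothetical, and the property that EnOS actually expects may differ.

```python
# Hypothetical helper: returns the interpreter property that routes jobs to a queue.
# Assumption: EnOS Zeppelin interpreters run on YARN and honor the standard
# Spark/Hadoop queue properties; the real EnOS setting may differ.

QUEUE_PROPERTY = {
    "spark": "spark.yarn.queue",        # standard Spark-on-YARN property
    "hive": "mapreduce.job.queuename",  # Hive on MapReduce (Tez uses tez.queue.name)
}


def queue_properties(interpreter: str, queue_name: str) -> dict:
    """Return {property: queue_name} for the given interpreter."""
    key = interpreter.strip().lower()
    if key not in QUEUE_PROPERTY:
        raise ValueError(f"unsupported interpreter: {interpreter!r}")
    if not queue_name or not queue_name.strip():
        # Without an explicit queue, jobs would keep using the default queue.
        raise ValueError("queue_name must be the name of the requested queue")
    return {QUEUE_PROPERTY[key]: queue_name.strip()}


if __name__ == "__main__":
    # "my_batch_queue" stands in for the queue name you received.
    print(queue_properties("spark", "my_batch_queue"))
```

Rejecting an empty queue name is a deliberate choice: an interpreter left without an explicit queue would keep submitting jobs to the default queue, which is the situation the requested Batch Data Processing resource is meant to avoid.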

For more information, see Supported Interpreters.

ML Model Hosting

We added the ML Model Hosting service for quickly deploying predictive machine learning algorithm models. The service provides the following features:

- Algorithm model source files are stored and managed in GitLab projects, which provides version control for the source files.

- Algorithm models can be deployed to multiple runtime environments, including Python 2.7, Python 3.6, and R 3.6.

- After a model is deployed successfully, its service is automatically initialized and hosted on EnOS APIM.

- After a model is deployed, you can quickly debug the hosted service by calling it from the product interface.
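To make the GitLab-based workflow more concrete, the sketch below shows what a minimal model source file might look like for the Python 3.6 environment. The file name `model.py`, the `predict()` entry point, its input format, and the coefficients are all hypothetical; the layout that ML Model Hosting actually requires is described in the ML Model Hosting documentation.

```python
# Hypothetical model source file (for example, model.py in a GitLab project).
# The predict() entry point and its input/output format are assumptions for
# illustration only; they are not the documented ML Model Hosting interface.

from typing import Dict, List

# Illustrative coefficients of a tiny linear model (not real trained values).
WEIGHTS: Dict[str, float] = {"wind_speed": 0.8, "temperature": -0.1}
BIAS: float = 1.5


def predict(records: List[Dict[str, float]]) -> List[float]:
    """Return one prediction per input record."""
    results = []
    for record in records:
        missing = [name for name in WEIGHTS if name not in record]
        if missing:
            raise KeyError(f"missing features: {missing}")
        score = BIAS + sum(w * record[name] for name, w in WEIGHTS.items())
        results.append(round(score, 6))
    return results


if __name__ == "__main__":
    print(predict([{"wind_speed": 10.0, "temperature": 20.0}]))  # prints [7.5]
```

Keeping the model as a plain function with no I/O makes it easy to test locally before committing the file to GitLab for deployment.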

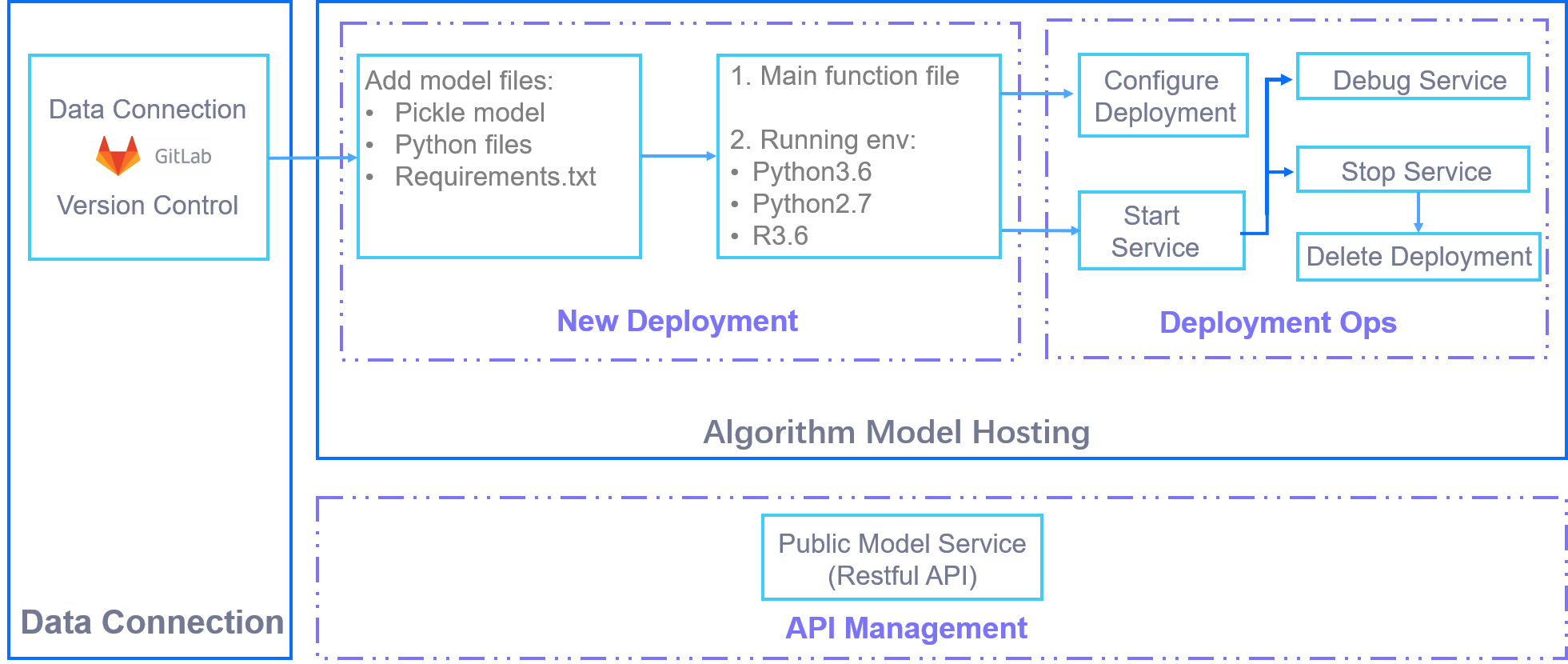

The workflow of hosting algorithm models is shown as follows:

The following figure shows the steps of deploying an algorithm model:

The following figure shows the steps of debugging the service that is hosted after the algorithm model is deployed:

For more information, see ML Model Hosting.
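The debugging step calls the hosted service from the product interface. A script could make a similar HTTP call; the sketch below builds such a request using only the Python standard library. The endpoint URL, the `records` payload field, and the bearer-token `Authorization` header are placeholders, not the documented EnOS APIM interface.

```python
# Hypothetical client for a model service hosted on EnOS APIM.
# Assumptions: the endpoint URL, the "records" field, and the bearer-token
# header are placeholders; consult the APIM documentation for the real contract.
import json
import urllib.request


def build_prediction_request(endpoint: str, token: str, records: list) -> urllib.request.Request:
    """Build (but do not send) a JSON POST request to the hosted model service."""
    body = json.dumps({"records": records}).encode("utf-8")
    return urllib.request.Request(
        endpoint,
        data=body,
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {token}",
        },
        method="POST",
    )


def call_service(request: urllib.request.Request, timeout: float = 10.0) -> dict:
    """Send the request and decode the JSON response body."""
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


if __name__ == "__main__":
    req = build_prediction_request(
        "https://apim.example.com/model/predict",  # hypothetical endpoint
        "YOUR_ACCESS_TOKEN",                       # hypothetical token
        [{"wind_speed": 10.0, "temperature": 20.0}],
    )
    print(req.get_method(), req.full_url)  # prints POST https://apim.example.com/model/predict
    # call_service(req) would send the request; it is not executed here.
```

Separating request construction from sending keeps the payload easy to inspect while debugging, before any network call is made.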