Stage a Model Version¶

After registering a model, you can stage its first version. Staging builds a model developed in AI Lab or on a local machine into a model image file that can be used for hosting.

You can also stage a version under a system-provided sample model.

Stage the First Model Version¶

You can stage the first version of a custom model by following these steps:

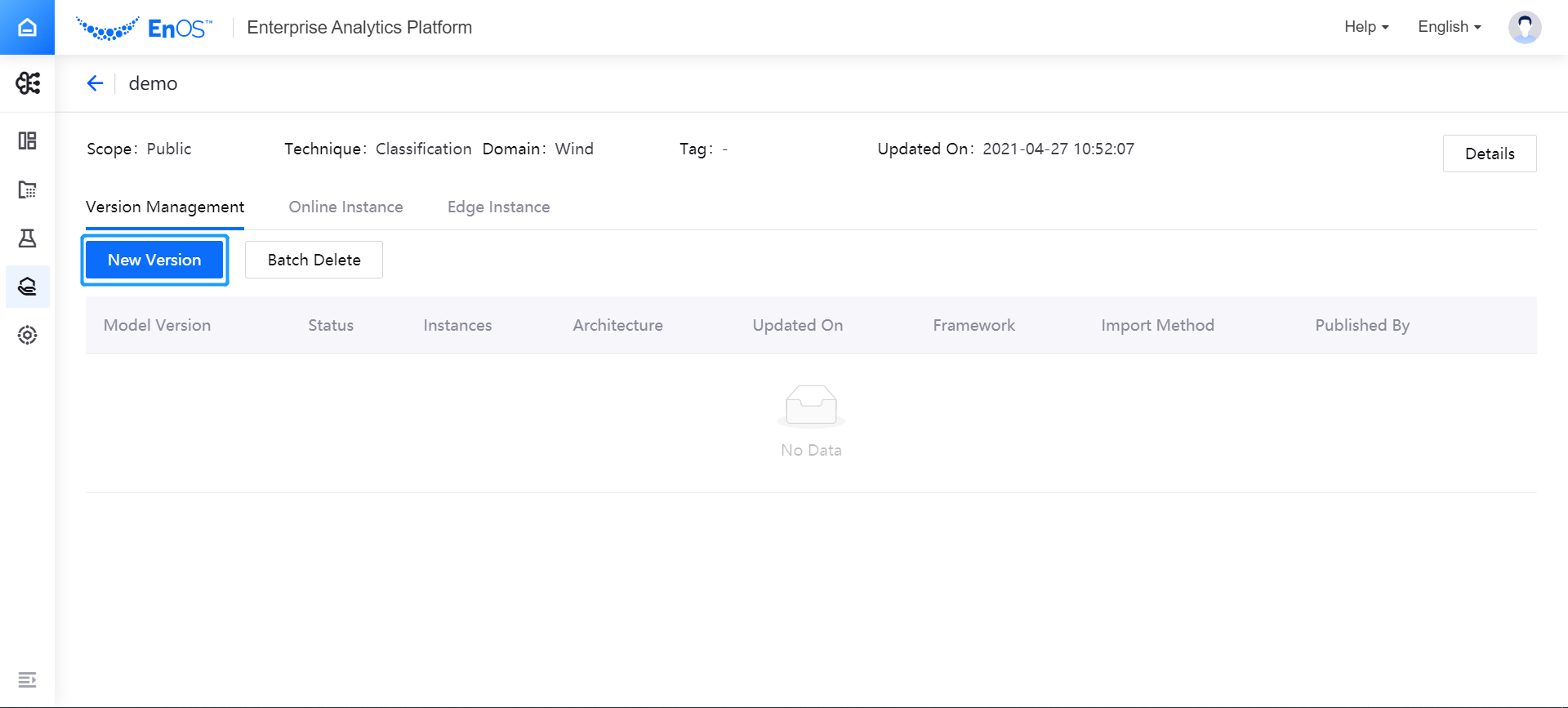

Open the custom model list page, then search for and select the registered model to open its model details page.

Under the Version Management tab, select New Version, and then follow the instructions to complete these stages in sequence: version configuration, model build, parameter settings, and testing of the staged version.

Configure Version Information¶

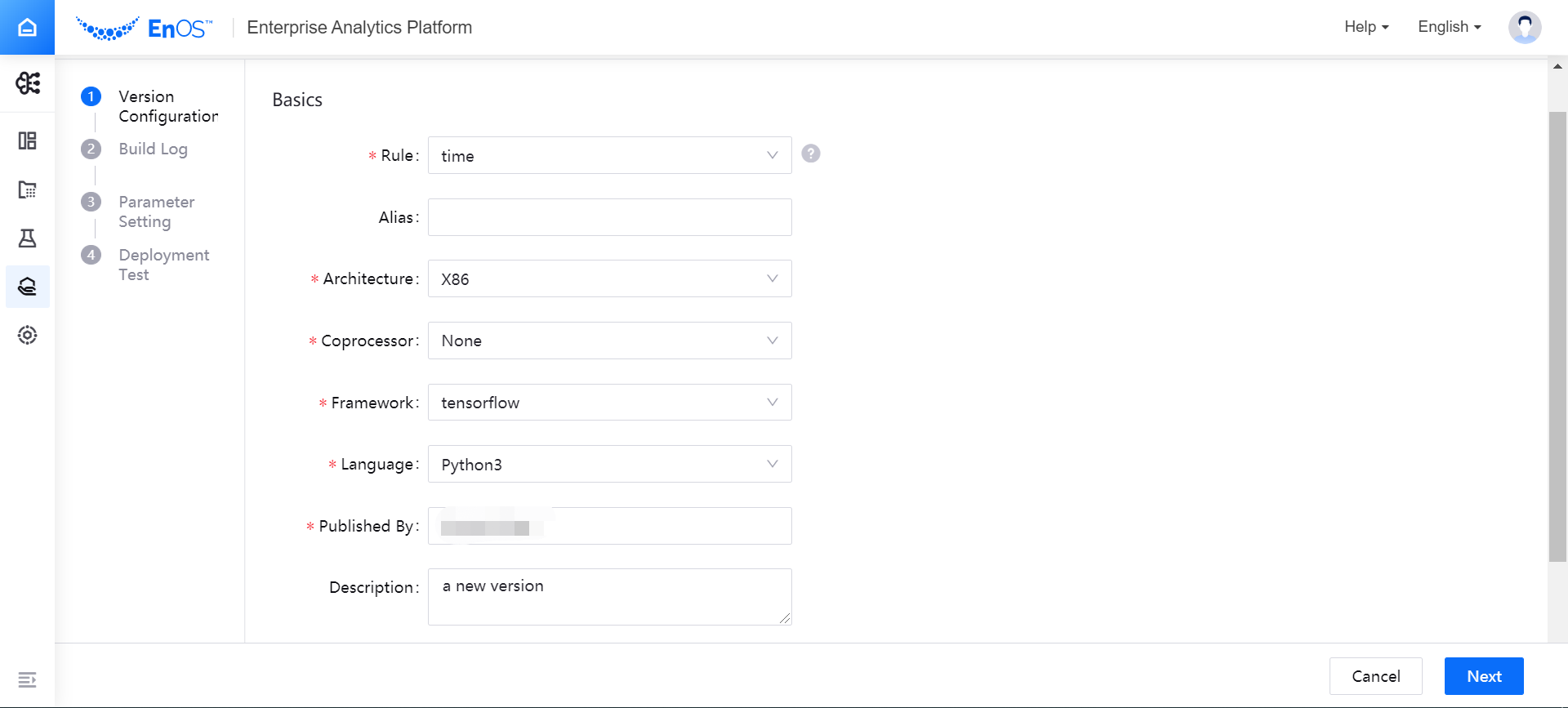

In the Basics section of the version configuration page, complete the basic information configuration of the version:

Rule: select the naming rule for the version. Currently, versions can be named by the system timestamp at the time the version is published

Alias: enter the alias of the model version

Architecture: select the basic hardware to run the model version

Coprocessor: select the coprocessor for running the model version. The computing frameworks supported by various coprocessors are given as follows:

GPU: supports tensorflow, pytorch, spark, etc. (Note: because the current IaaS does not support GPU, model versions cannot currently use GPU capabilities.)

TPU: supports tensorflow and pytorch

VPU: supports tensorflow and pytorch

None: default option, for which the computing architecture will keep the current logic

Framework: select the computing framework to run the model version

Language: select the language to be used for developing the model version (matched with the selected computing framework)

Published By: enter the name of the user who published the model version; the current EnOS user name is used by default

Description: enter a brief description of the model version
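The coprocessor and framework pairings listed above can be captured as a small lookup table, for example to check a planned configuration before filling in the form. This is only an illustrative sketch: the function name is hypothetical, the table contains only the frameworks named explicitly in this section (the "etc." for GPU is not enumerated), and treating `None` as accepting any framework is an assumption.

```python
# Frameworks named in this section for each coprocessor.
# The GPU entry ends with "etc." in the text, so this set may be incomplete.
LISTED_FRAMEWORKS = {
    "GPU": {"tensorflow", "pytorch", "spark"},
    "TPU": {"tensorflow", "pytorch"},
    "VPU": {"tensorflow", "pytorch"},
}


def is_listed_combination(coprocessor, framework):
    """Return True if the framework is listed for the given coprocessor."""
    if coprocessor == "None":
        # Assumption: with no coprocessor selected, no framework is excluded.
        return True
    return framework.lower() in LISTED_FRAMEWORKS.get(coprocessor, set())
```

For example, `is_listed_combination("TPU", "spark")` returns `False` because spark is listed only for GPU.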

In the Model Deployment section of the version configuration page, select the method to build the model, and complete the corresponding configuration for uploading the model files. The available deployment methods differ by input data type (Text or Image).

If you choose the MLflow or Seldon import method, define where and how to save the model version in the Advanced Configurations section.

Use the OU-embedded image repository: select Yes to upload the image to the OU-embedded image repository.

Save as model files: model files can be used in the AI Analytics Suite directly.

For Text Input Data Type¶

For the text input data type (Text or Tabular), complete the model deployment using one of the following methods:

If you choose the MLflow import method, you can select the file to build the model version by following these steps:

In the Full Path field, select Import files. The Artifacts file generated by the AI Lab training task will be displayed in the pop-up window. For more information about viewing model building files through MLflow, see Monitor Notebook Running Instances.

Browse to and select the directory that contains the model file to be built (the directory hierarchy is Project Name > Run Name > Instance Name), and then select Confirm.

The selected model building file directory will be displayed in the Full Path field.

If you choose the Container image file import method, the system will automatically specify the directory where the folder is located, and you can select the target files needed to build the model from multiple hierarchical directories of Artifacts source and Git source. When deploying the model, the system will deploy the specified image as a service.

Select Artifacts Source or Git Source to select the image file for model deployment (you can select files from either source; Git Source supports getting files from a Git branch or tag).

In the list of selected files, view the file details and remove unnecessary files.

In the Entry point dropdown menu, select Dockerfile.

(Optional) If you need to manage the target files in the target folder when importing image files from the Artifacts source, you can select Manage Target Folder on the right side of the page and drag the target file to the specified folder in the pop-up window for managing the target folder.

If you choose the Seldon import method, you can use s2i build to create a Docker image from the source code. The system will automatically specify the directory where the folder is located, and you can select the target files needed to build the model from multiple hierarchical directories of Artifacts source and Git source. When deploying the model, the system will deploy the specified image as a service.

Select Artifacts Source or Git Source to select the image file for model deployment (you can select files from either source; Git Source supports getting files from a Git branch or tag).

In the list of selected files, view the file details and remove unnecessary files.

In the Entry point dropdown menu, select the entry file.

If you choose the Customized import method, you can package the model into a Docker image file on a third-party custom system, and then import it.

The system currently supports online access as the file loading method. In the URL input box, enter the address to obtain the model file.
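For the Seldon import method described above, the entry file depends on the selected language. As a rough illustration only, assuming the Seldon Core Python wrapper is used, the entry file typically defines a class with a `predict` method. The class name `ExampleModel` and its fixed weights are hypothetical placeholders, and plain lists stand in for the NumPy arrays the wrapper normally passes.

```python
class ExampleModel:
    """Hypothetical entry class in the style of the Seldon Core Python wrapper."""

    def __init__(self):
        # A real model would load its trained artifacts here.
        # A fixed weight vector stands in for them in this sketch.
        self.weights = [0.5, -0.25]

    def predict(self, X, features_names=None):
        # X is a batch of rows; return one single-value prediction per row.
        return [
            [sum(w * x for w, x in zip(self.weights, row))]
            for row in X
        ]
```

Calling `ExampleModel().predict([[1, 0], [0, 1]])` returns one score per input row, which makes the class easy to try locally before building the image.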

For Image Input Data Type¶

For the image input data type (Image), complete the model deployment using one of the following methods:

If you choose the Triton Server import method, you can select the file to build the model version by following these steps:

In the Full Path field, select Import files. The Artifacts file generated by the AI Lab training task will be displayed in the pop-up window. For more information about viewing model building files through MLflow, see Monitor Notebook Running Instances.

Browse to and select the directory that contains the model file to be built (the directory hierarchy is Project Name > Run Name > Instance Name), and then select Confirm.

The selected model building file directory will be displayed in the Full Path field.

If you choose the Customized import method, you can package the model into a Docker image file on a third-party custom system, and then import it.

The system currently supports online access as the file loading method. In the URL input box, enter the address to obtain the model file.

After configuring the model file upload, select Next. The system automatically starts building the model.

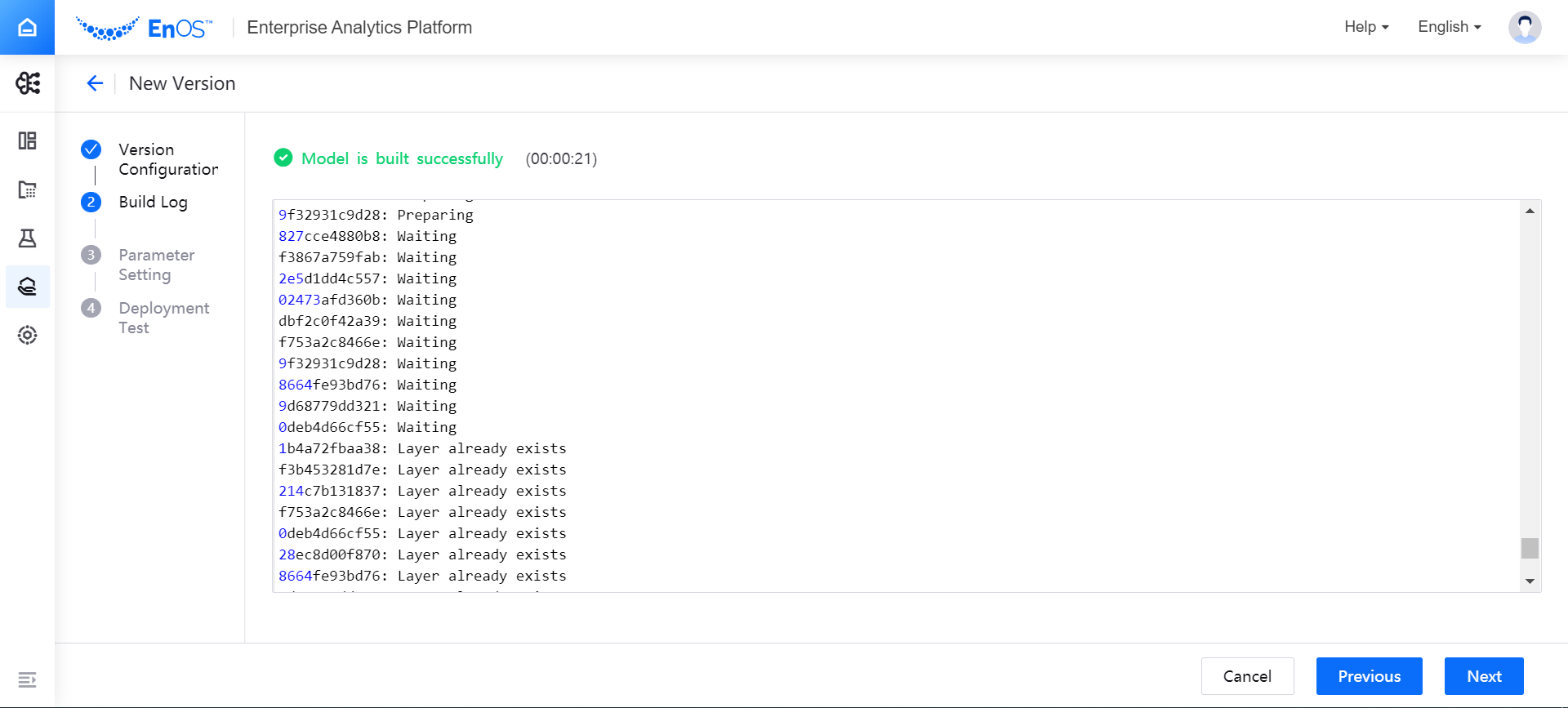

Build the Model¶

On the Build Log page, view the model building process. The build log displays the process in real time and records the time spent on the build.

If the model fails to build, you can view the log to locate the cause of the failure, and select Previous to modify the configuration.

After the model is successfully built, select Next to proceed to the parameter settings.

(Optional) Set Model Parameters¶

Use the parameter settings to configure custom parameters for the model.

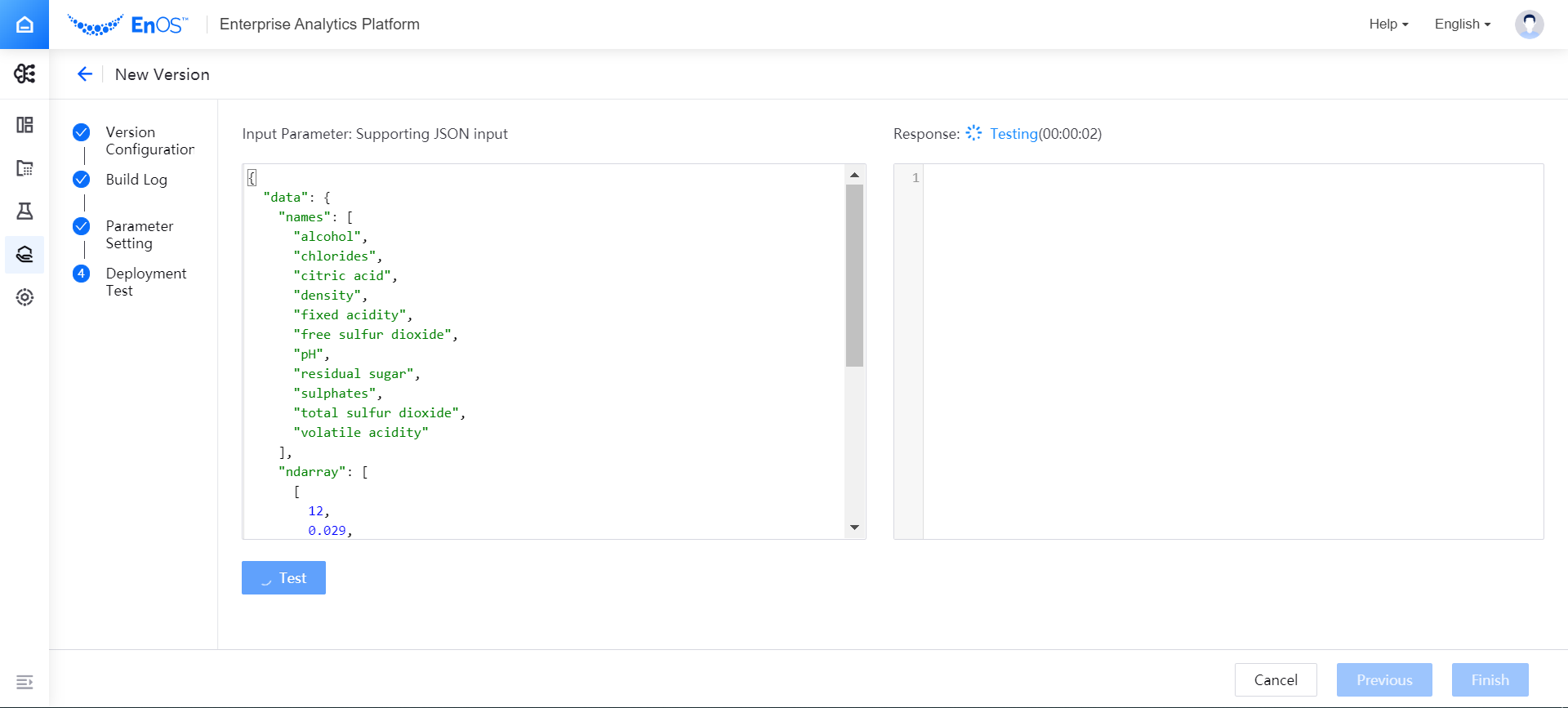

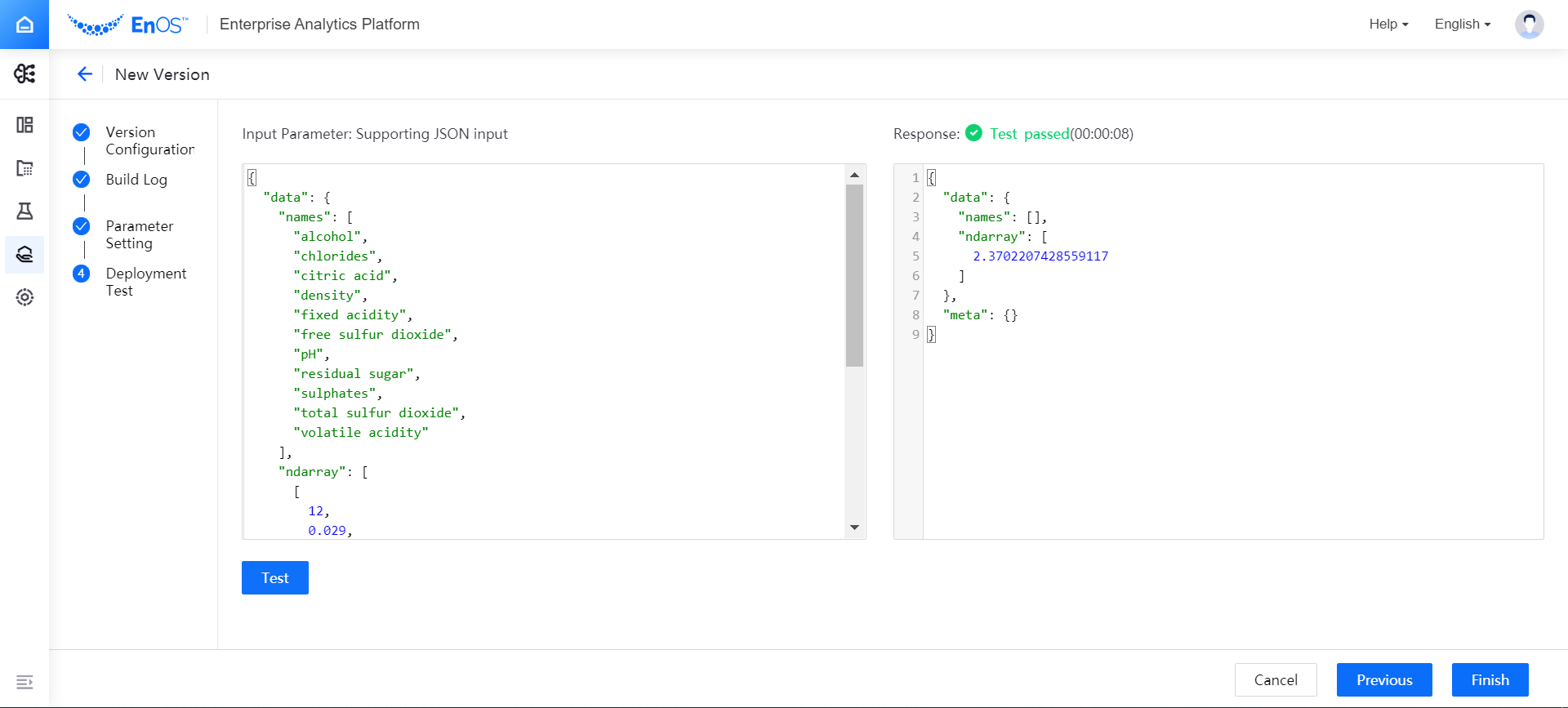

Test the Staged Model Version¶

Based on the input and output parameters defined when the model was created, write a test script or upload an image to verify that the model version works as expected. The testing steps differ by input data type (Text or Image).

For Text Input Data Type¶

For the text input data type (Text or Tabular), take the following steps to test the model version:

In the Input Parameter box, write a test script and select Test. Note: The size of the test JSON file automatically generated for feature parameters cannot exceed 32KB.

In the Response box, view the test results of the model version.

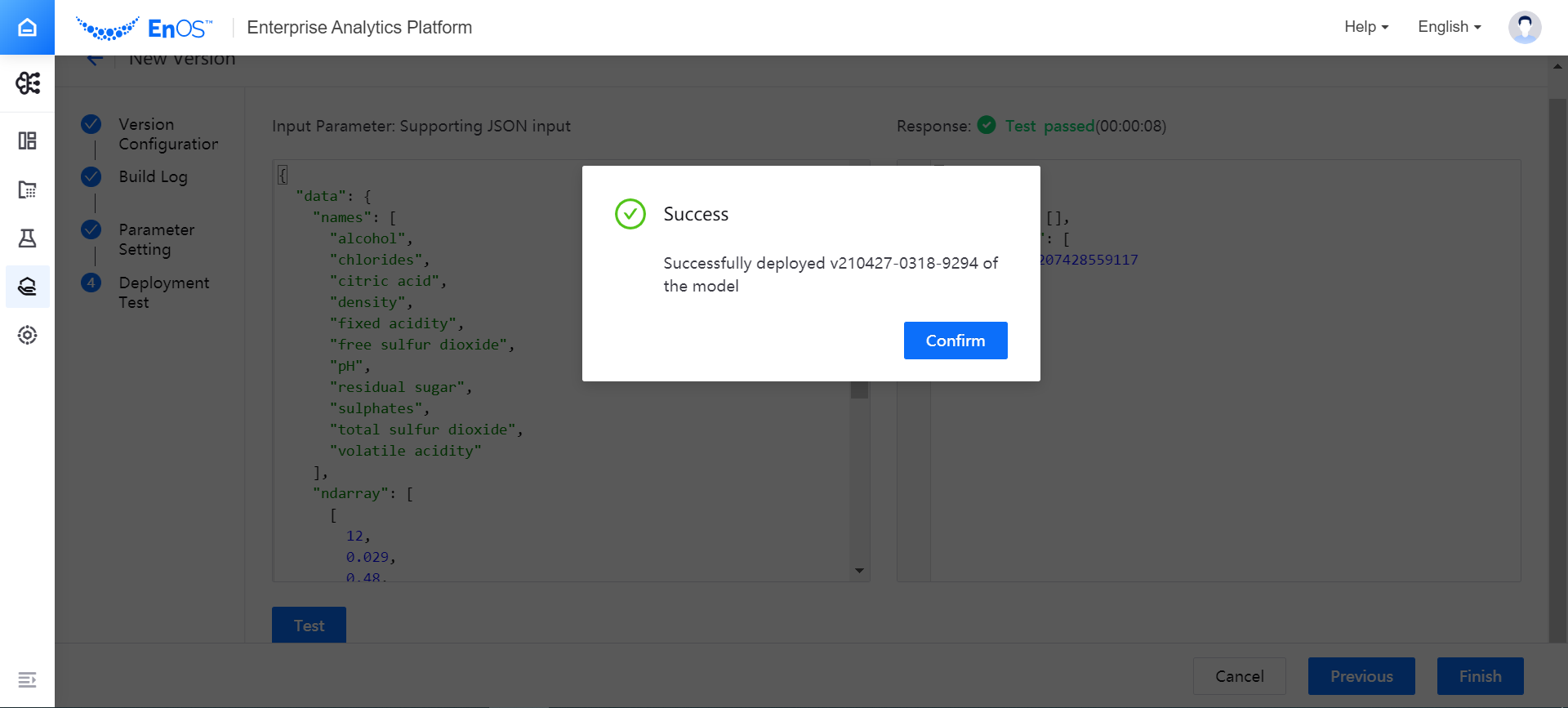

After the model version passes the test, select Finish to stage it. If the version does not need to be tested, or the test failed, you can also skip the test and stage the model version directly.

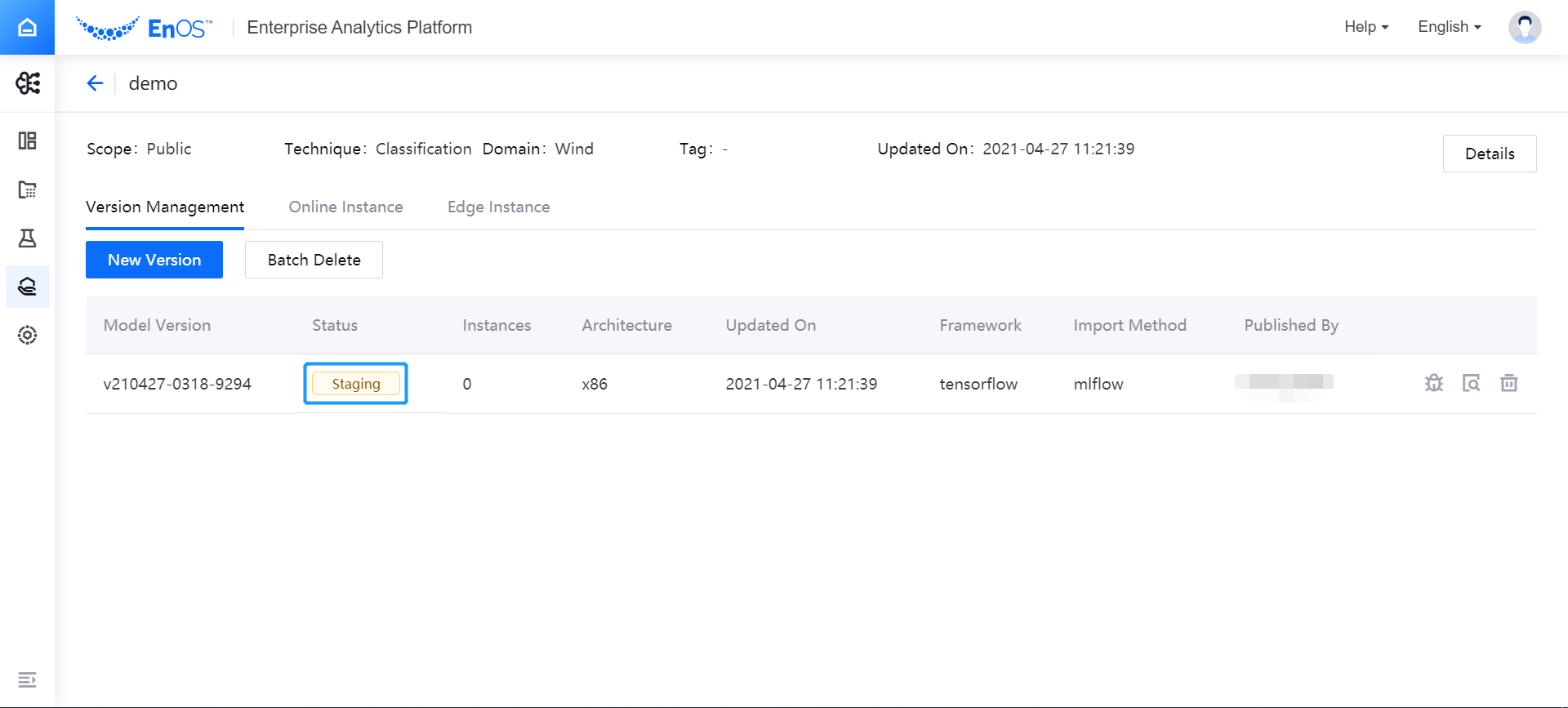

The staged model version is displayed under the Version Management tab on the model details page. Its status is now Staging. After checking the version, you can deploy it and run it online.

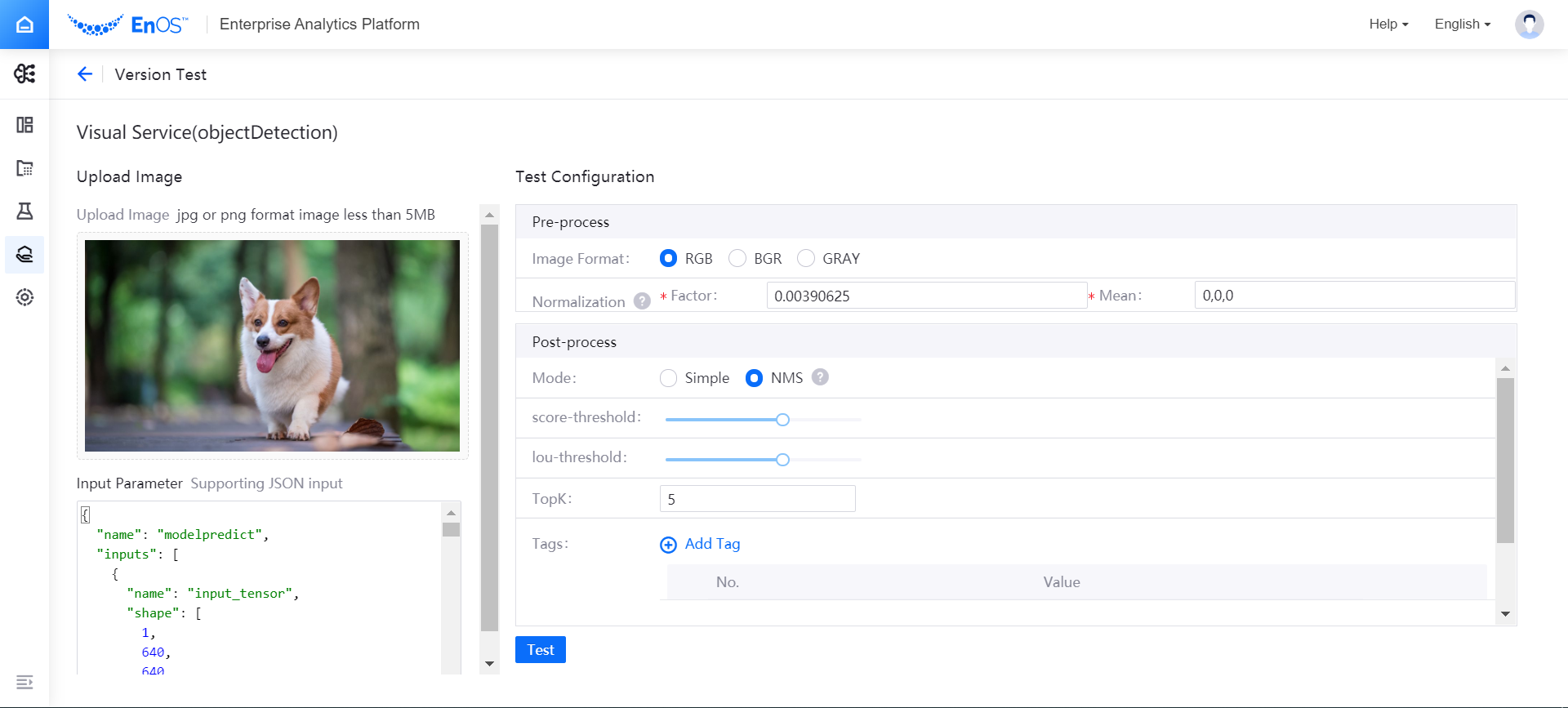
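The text-input test steps above note a 32KB limit on the test JSON. Before pasting a large payload into the Input Parameter box, its serialized size can be checked locally. The following is a minimal sketch under stated assumptions: the limit is interpreted as 32 × 1024 bytes of UTF-8 encoded JSON, the function names are hypothetical, and the payload fields in the usage note are placeholders rather than the platform's actual schema.

```python
import json

# Assumption: "32KB" means 32 * 1024 bytes of UTF-8 encoded JSON.
MAX_TEST_JSON_BYTES = 32 * 1024


def serialized_size(payload):
    """Return the size in bytes of the payload serialized as UTF-8 JSON."""
    return len(json.dumps(payload).encode("utf-8"))


def fits_test_limit(payload):
    """Return True if the serialized payload is within the assumed limit."""
    return serialized_size(payload) <= MAX_TEST_JSON_BYTES
```

For instance, `fits_test_limit({"data": {"names": ["f1", "f2"], "ndarray": [[1.0, 2.0]]}})` returns `True`, while a payload with tens of thousands of feature values is likely to exceed the limit and should be trimmed before testing.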

For Image Input Data Type¶

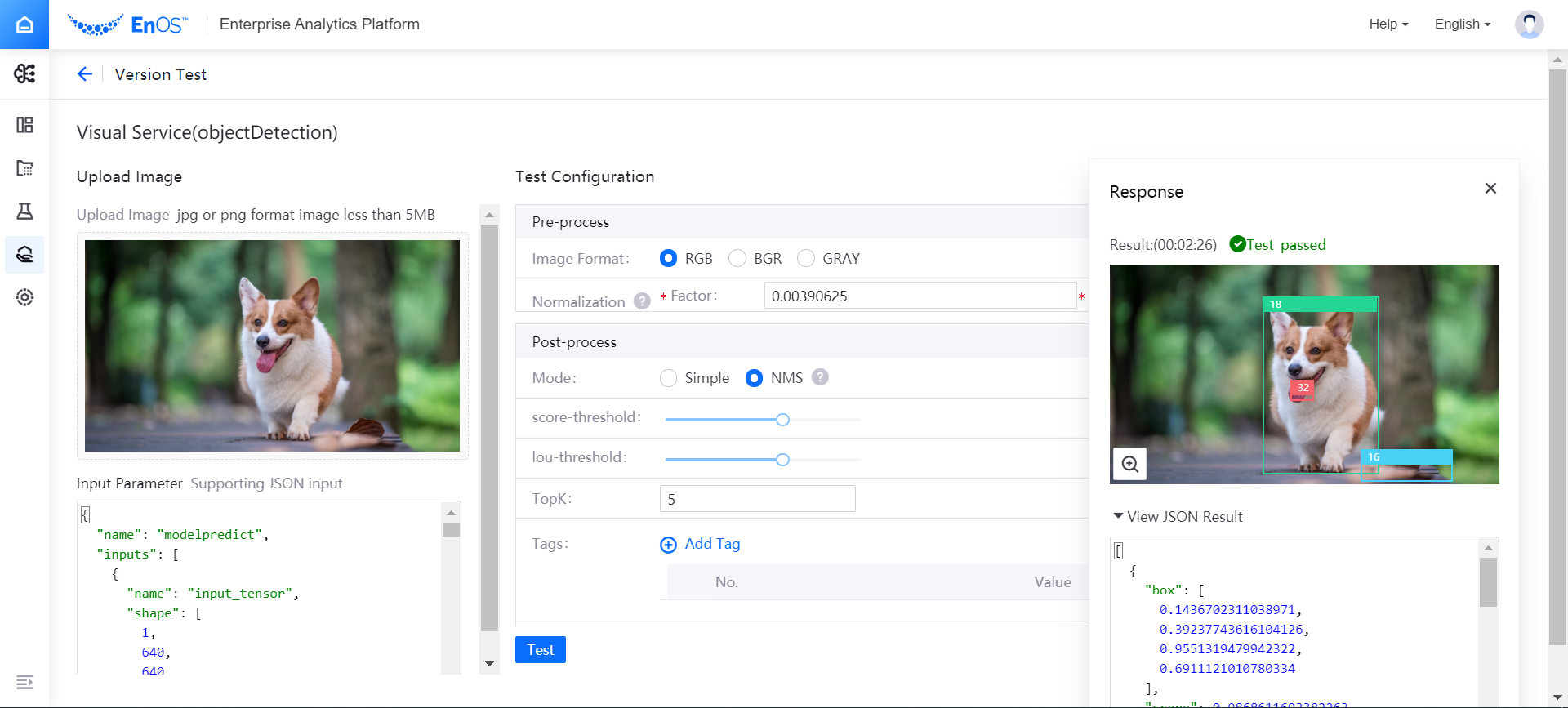

For the image input data type (Image), take the following steps to test the model version:

In the Upload Image box, select and upload an image (jpg or png format) for testing. The size of the image must not exceed 5MB.

In the Input Parameter box, the test JSON file will be automatically generated. You can update the JSON file as needed.

In the Test Configuration section, configure the pre-processing and post-processing for the test image.

Select Test, and the system will start testing the model version based on the configuration. You can check the testing result and JSON result in the Response section.

After the model version passes the test, select Finish to stage it. If the version does not need to be tested, or the test failed, you can also skip the test and stage the model version directly.
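The upload constraints in the image test steps above (jpg or png format, no larger than 5MB) can be checked before uploading. This is a sketch under assumptions: the limit is taken as 5 × 1024 × 1024 bytes, the format is judged by file extension only, and whether the `.jpeg` extension is accepted is not stated in this document, so it is excluded here. The function name is hypothetical.

```python
import os

# Assumption: "5MB" means 5 * 1024 * 1024 bytes.
MAX_IMAGE_BYTES = 5 * 1024 * 1024
# Only the extensions named in the text; ".jpeg" is not confirmed.
ALLOWED_EXTENSIONS = {".jpg", ".png"}


def image_problems(filename, size_bytes):
    """Return a list of reasons the image may be rejected (empty if none)."""
    problems = []
    extension = os.path.splitext(filename)[1].lower()
    if extension not in ALLOWED_EXTENSIONS:
        problems.append("unsupported format: " + (extension or "no extension"))
    if size_bytes > MAX_IMAGE_BYTES:
        problems.append("file exceeds the assumed 5MB limit")
    return problems
```

For a file on disk, the check can be called as `image_problems(path, os.path.getsize(path))`. An empty list means neither constraint is violated.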

Export the Model Version Image¶

After the model version is staged, the AI Studio administrator or AI Studio project operation can export the model version image to deploy model services on devices or in private environments where AI Studio is not installed.

Note

Because image files are usually large, the Version Management page of AI Hub no longer provides direct image file downloads. You can download model version images by using Docker commands in a Notebook instance of AI Lab. For detailed steps, see Download Model Version Image.

Stage a New Version Based on an Existing Version¶

To quickly stage a similar version, select the Copy icon of an existing model version, and then update the configuration as needed, starting from the existing settings.

Next Step¶

After the model version is staged, it can be deployed online and moved into production status. For more information, see Deploy a Model Version.