Batch Data Processing Overview

Data developers can use the EnOS Batch Data Processing service to develop workflows that process batch data. The service also provides Script Development, Task Resource Management, and Workflow Operation features to help you complete data analysis tasks efficiently.

Key Concepts

Workflow

A workflow is an automated data processing flow that consists of tasks, references, and relations. A workflow is a directed acyclic graph (DAG), so it cannot contain circular dependencies. A workflow can be scheduled to run once or periodically.
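A workflow must be acyclic, so any tool that builds one has to reject relations that would close a loop. The following minimal Python sketch is not the service's implementation. It checks acyclicity with Kahn's topological-sort algorithm. The `is_acyclic` function and the task names are hypothetical, and relations are modeled as `(upstream, downstream)` pairs.

```python
from collections import deque


def is_acyclic(tasks, relations):
    """Return True if the tasks and relations form a directed acyclic graph.

    tasks: iterable of task names (hypothetical identifiers).
    relations: iterable of (upstream, downstream) pairs.
    """
    relations = list(relations)
    nodes = set(tasks)
    # Include any node that only appears in a relation.
    nodes.update(n for pair in relations for n in pair)

    indegree = {n: 0 for n in nodes}
    downstream = {n: [] for n in nodes}
    for up, down in relations:
        downstream[up].append(down)
        indegree[down] += 1

    # Kahn's algorithm: repeatedly remove nodes that have no incoming relations.
    queue = deque(n for n in nodes if indegree[n] == 0)
    visited = 0
    while queue:
        node = queue.popleft()
        visited += 1
        for nxt in downstream[node]:
            indegree[nxt] -= 1
            if indegree[nxt] == 0:
                queue.append(nxt)

    # Nodes never freed lie on, or behind, a cycle.
    return visited == len(nodes)


print(is_acyclic(["reference", "task1", "task2"],
                 [("reference", "task1"), ("reference", "task2")]))  # True
print(is_acyclic(["a", "b"], [("a", "b"), ("b", "a")]))              # False
```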

Task

A task is the fundamental element of a workflow and defines how the data is processed. Running a task runs the resource associated with it. The Batch Data Processing service provides the following types of tasks:

Data Synchronization task: A task that synchronizes data from an external data source to the EnOS Hive library. For more information, see Data Synchronization.

SHELL task: A task that runs a Shell script.

External APP: A task that runs an external application as a task node.

PYTHON task: A task that runs a Python script.

Reference

A reference is a task or workflow that is a prerequisite for its subsequent tasks. A reference must be the root node of a workflow, and a workflow can have more than one reference. Regardless of a task's scheduling settings, the task does not run until its reference has run.

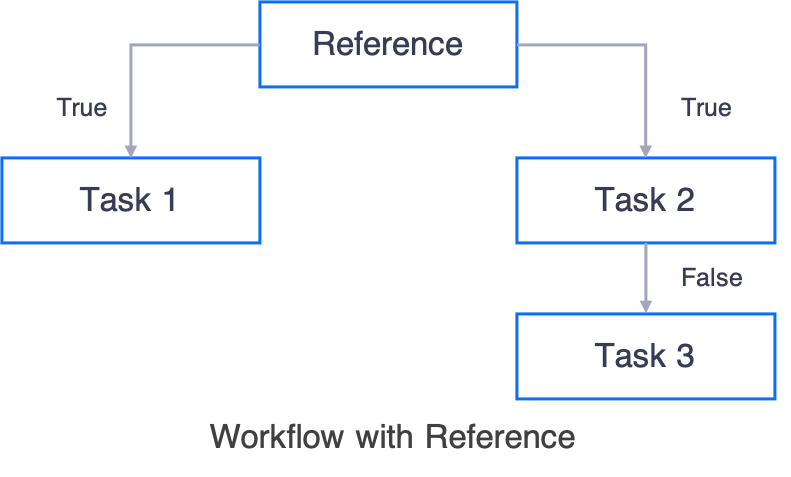

The following figure shows an example workflow with a reference.

Task 1 and Task 2 will not run until the reference is run.
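The dependency rule can be illustrated with a small readiness check. This sketch assumes that each node simply lists the nodes it depends on. It returns the tasks whose references and upstream tasks have all completed, and it ignores each task's own scheduling time. The `ready_tasks` function and the node names are hypothetical and do not come from the EnOS service.

```python
def ready_tasks(upstream, completed):
    """Return, sorted, the tasks whose dependencies have all completed.

    upstream: dict mapping each task to the set of tasks it depends on.
    completed: set of tasks that have already run.
    """
    return sorted(
        task
        for task, deps in upstream.items()
        # A task is ready if it has not run yet and every dependency has.
        if task not in completed and deps <= completed
    )


# The figure's example: Task 1 and Task 2 both depend on the reference.
upstream = {
    "reference": set(),
    "task1": {"reference"},
    "task2": {"reference"},
}

print(ready_tasks(upstream, completed=set()))          # ['reference']
print(ready_tasks(upstream, completed={"reference"}))  # ['task1', 'task2']
```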

In a periodic workflow, all tasks run in each cycle defined by the scheduling parameters.

The True and False settings apply only at the monitoring stage, when you rerun a task.

When True, the subsequent task is run.

When False, the subsequent task is not run.

Relation

An upstream task connects to a downstream task through a relation. A relation is unidirectional: it runs from the upstream task to the downstream task.

Resource

A resource is the script that is run by SHELL and PYTHON tasks. The supported resource formats are: sh, jar, sql, hql, xml, zip, tar, tar.gz, and py.
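Before uploading, you could run a client-side check of resource files against the supported formats. The following sketch illustrates such a check. The function name and the case-insensitive matching are assumptions, not documented behavior of the service. Matching on the full suffix keeps the compound `tar.gz` format intact, whereas `os.path.splitext` would report only `.gz`.

```python
SUPPORTED_FORMATS = ("sh", "jar", "sql", "hql", "xml", "zip", "tar", "tar.gz", "py")


def resource_format(filename):
    """Return the supported format of a resource file, or None if unsupported.

    Matching is case-insensitive; this is an assumption of the sketch.
    """
    name = filename.lower()
    for fmt in SUPPORTED_FORMATS:
        # endswith(".tar.gz") treats the compound extension as one format.
        if name.endswith("." + fmt):
            return fmt
    return None


print(resource_format("etl_job.tar.gz"))  # tar.gz
print(resource_format("clean.py"))        # py
print(resource_format("notes.txt"))       # None
```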

Stages of Workflow Development

The workflow development process has the following stages.

Configuration Stage

During the configuration stage, you create a workflow with the tasks it should run. You can then pre-run the workflow to verify that it runs as designed.

Running Stage

At the running stage, the workflow runs according to its scheduling parameters.

Operation Stage

At the operation stage, also called the monitoring stage, you can rerun a single task node, or a node and all of its subsequent nodes, to pinpoint issues with the workflow.
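Rerunning a node together with its subsequent nodes amounts to collecting every node reachable from it along the relations. The sketch below illustrates that idea and is not the platform's rerun logic. The `nodes_to_rerun` function, the `include_downstream` flag, and the node names (including `task3`) are hypothetical.

```python
def nodes_to_rerun(downstream, node, include_downstream):
    """Return the set of nodes to rerun, starting from `node`.

    downstream: dict mapping each node to the nodes that directly follow it.
    include_downstream: False reruns only `node`; True also reruns every
    node reachable from it through relations.
    """
    if not include_downstream:
        return {node}
    selected, stack = set(), [node]
    # Depth-first traversal along relations; `selected` avoids revisiting.
    while stack:
        current = stack.pop()
        if current in selected:
            continue
        selected.add(current)
        stack.extend(downstream.get(current, []))
    return selected


downstream = {"reference": ["task1", "task2"], "task1": ["task3"], "task2": [], "task3": []}
print(sorted(nodes_to_rerun(downstream, "task1", include_downstream=False)))  # ['task1']
print(sorted(nodes_to_rerun(downstream, "task1", include_downstream=True)))   # ['task1', 'task3']
```

The function only selects nodes. An actual rerun would still need to execute them in dependency order, for example following the workflow's topological order.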

Major Functionalities

Script Development

The Script Development feature supports editing, running, and debugging scripts online, as well as script version management. The developed scripts can then be used by PYTHON tasks in workflows.

Workflow Designer

In the Workflow Designer, you can design a workflow that fits your business requirements. A workflow can contain multiple tasks, each of which performs specific actions on your data.

Task Resources

You can register your scripts as resources and manage resource versions. Tasks in a workflow can then reference these resources.

Workflow Operation

After a workflow is published, it runs automatically according to its scheduling parameters. A workflow also runs manually when you pre-run it. The Workflow Operation module lets you view the running status of workflows and perform monitoring actions.

Resource Preparation

Batch Data Processing - Container

Before configuring Batch Data Processing tasks, ensure that your OU has requested the Batch Data Processing - Container resource on the EnOS Management Console > Resource Management page. For more information, see Preparing the Container Resource.