Troubleshoot AI Hub¶

This section helps you identify and resolve issues that can occur when you use AI Hub.

“Need GNU Compiler Collection” error when building a model in AI Hub¶

A "Need GNU Compiler Collection (GCC)" error occurs when you try to build a model in AI Hub.

Cause¶

This issue occurs because the wheel package is missing.

Solution¶

Open the Notebook instance of the model in AI Lab.

Open a Notebook terminal and run the following command to install the missing package:

pip install thriftpy
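Because the error message mentions GCC, it can help to confirm whether a compiler is visible from the Notebook environment before reinstalling. The following is a minimal sketch, assuming the compiler executable is named gcc; the helper name compiler_on_path is illustrative and is not part of AI Hub.

```python
import shutil


def compiler_on_path(executable="gcc"):
    """Return True if the named executable can be found on PATH."""
    return shutil.which(executable) is not None


if __name__ == "__main__":
    print("gcc found:", compiler_on_path())
```

If the result is False, installing a prebuilt package as described above avoids the need to compile from source.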

“NO such file or directory:’model’” error when testing an Mlflow-staged model¶

A "NO such file or directory:'model'" error occurs in the test log when you test a model that is staged with the Mlflow method.

Cause¶

The value of the model parameter in mlflow.{framework}.log_model is not model. Here, {framework} refers to the framework you use in the Mlflow process.

Solution¶

Open the Notebook instance of the model in AI Lab.

From File Browser, open the model-staging file in JupyterLab.

Check the mlflow.{framework}.log_model function, and change the value of the model parameter to model.
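The check in the last step can also be done programmatically. The sketch below assumes that the parameter in question is artifact_path, as in the log_model call shown at the end of this page, and that it is passed by keyword; calls that pass it positionally are not inspected. The function name find_bad_artifact_paths is hypothetical.

```python
import ast


def find_bad_artifact_paths(source, expected="model"):
    """Return (line, value) pairs for log_model calls with a different artifact_path."""
    problems = []
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.Call):
            continue
        func = node.func
        # Match any call of the form <something>.log_model(...).
        if not (isinstance(func, ast.Attribute) and func.attr == "log_model"):
            continue
        for kw in node.keywords:
            if kw.arg != "artifact_path":
                continue
            # Only literal values can be checked without running the code.
            if isinstance(kw.value, ast.Constant) and kw.value.value != expected:
                problems.append((node.lineno, kw.value.value))
    return problems
```

Pass the text of the model-staging code to the function; an empty list means that every keyword artifact_path it found is already set to model.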

“IsADirectoryError:[ERROR 21] is a directory :’/microservice/model’” error when testing an Mlflow-staged model¶

An "IsADirectoryError:[ERROR 21] is a directory :'/microservice/model'" error occurs in the test log when you test a model that is staged with the Mlflow method.

Cause¶

This issue occurs because Mlflow 1.9 or later is used. AI Hub currently supports only Mlflow 1.8 or earlier.

Solution¶

Use Mlflow 1.8.0 or earlier to stage the model version. To install Mlflow 1.8.0, follow these steps:

Go to AI Lab, and open the Notebook instance of the model.

From File Browser, open the model-staging file in JupyterLab.

Run the following command:

conda install mlflow==1.8.0

Stage and test the model version again after the installation completes.
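To confirm which version is active before staging again, a small check such as the following can be run in the Notebook. It is a sketch only: the ceiling of 1.8 comes from the cause described above, and the helper names are illustrative.

```python
import re


def is_supported_mlflow(version, ceiling=(1, 8)):
    """Return True if the major.minor part of version is at or below ceiling."""
    match = re.match(r"(\d+)\.(\d+)", version)
    if match is None:
        raise ValueError("unrecognized version string: {!r}".format(version))
    return (int(match.group(1)), int(match.group(2))) <= ceiling


def installed_mlflow_version():
    """Return the installed mlflow version string, or None if it is not installed."""
    from importlib import metadata  # available in Python 3.8 and later
    try:
        return metadata.version("mlflow")
    except metadata.PackageNotFoundError:
        return None
```

For example, is_supported_mlflow(installed_mlflow_version()) can be evaluated once the installation completes, provided that the second function does not return None.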

“ModuleNotFoundError: No module named ‘{framework}’” error when testing an Mlflow-staged model¶

A "ModuleNotFoundError: No module named '{framework}'" error occurs in the test log when you test a model that is staged with the Mlflow method. Here, {framework} refers to the framework you use in the Mlflow process.

Possible Causes¶

Cause 1: The model training code imports the wrong machine learning framework.

Cause 2: The log_model function is not invoked as mlflow.{framework}.log_model for the framework you use.

Solutions¶

Go to AI Lab, and open the Notebook instance of the model.

From File Browser, open the model-staging file in JupyterLab.

Run one of the following commands:

For cause 1: run import mlflow.{framework} to import the specified framework.

For cause 2: run mlflow.{framework}.log_model to invoke the specified framework.

Stage and test the model version again.
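Before staging again, you can check whether the module named in the error can be located in the Notebook environment. The helper below is a minimal sketch that uses only the standard library; the name module_available is illustrative.

```python
import importlib.util


def module_available(name):
    """Return True if the import system can locate the named module."""
    try:
        return importlib.util.find_spec(name) is not None
    except ModuleNotFoundError:
        # Raised for dotted names when a parent package is missing.
        return False
```

For example, if you use scikit-learn, module_available("mlflow.sklearn") indicates whether that Mlflow flavor module can be found.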

“AttributeError: ‘str’ object has no attribute ‘decode’” error when testing an Mlflow-staged model¶

An "AttributeError: 'str' object has no attribute 'decode'" error occurs in the test log when you test a model that is staged with the Mlflow method.

Cause¶

This issue occurs because of an h5py version conflict. Use h5py 2.10.0.

Solution¶

Go to AI Lab, and open the Notebook instance of the model.

From File Browser, open the model-staging file in JupyterLab.

Run the following command:

conda install h5py==2.10.0

Stage and test the model version again after the installation completes.

“File “/microservice/MlflowPredictor.py”, line 30, in predict” error when testing an Mlflow-staged model¶

A "File "/microservice/MlflowPredictor.py", line 30, in predict" error occurs in the test log when you test a model version that is staged with the Mlflow method.

Cause¶

This issue occurs because of errors in the model files.

Solution¶

Copy the code from the left code box on the Model Test page.

Go to AI Lab, and open the model file in JupyterLab.

Test the model in the file with the code you copied.

Fix the issues in your local model files according to the test results.

“TypeError: an integer is required (got type bytes)” error when testing an Mlflow-staged model¶

A "TypeError: an integer is required (got type bytes)" error occurs in the test log when you test a model that is staged with the Mlflow method. The error looks like this in the test log:

Possible Causes¶

Cause 1: The model was trained with TensorFlow 1.15 or earlier.

Cause 2: The package versions in Notebook differ from the versions used during the Mlflow registering process.

Cause 3: The Python version in Notebook differs from the version used during the Mlflow registering process.

Solutions¶

For cause 1: update TensorFlow to 2.3.1.

For cause 2: fix package version conflicts with the following steps:

Go to AI Lab, and open the Notebook instance of the model.

From File Browser, select conda.yaml.

Check that the package versions in conda.yaml are the same as the versions used during the Mlflow registering process.
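The comparison in the last step can be partly automated. The sketch below assumes that you copy the pip-style pins (entries of the form name==version) from conda.yaml into a list by hand; conda-style entries with a single = and unpinned entries are skipped. The function names are illustrative.

```python
from importlib import metadata  # available in Python 3.8 and later


def _installed_version(name):
    """Return the installed version of a package, or None if it is missing."""
    try:
        return metadata.version(name)
    except metadata.PackageNotFoundError:
        return None


def find_version_mismatches(pins, lookup=None):
    """Compare 'name==version' pins with the installed versions.

    Returns a dict that maps each mismatched package name to a
    (pinned, installed) pair; installed is None for missing packages.
    """
    if lookup is None:
        lookup = _installed_version
    mismatches = {}
    for pin in pins:
        if "==" not in pin:
            continue  # unpinned or conda-style entry, nothing to compare
        name, pinned = (part.strip() for part in pin.split("==", 1))
        installed = lookup(name)
        if installed != pinned:
            mismatches[name] = (pinned, installed)
    return mismatches
```

An empty result means that every pinned package in the list matches the version installed in the Notebook environment.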

For cause 3: fix Python version conflicts with the following steps:

Go to AI Lab, and open the Notebook instance of the model.

Open a terminal and run the python --version command to view the Python version in Notebook.

Check that the Python version in Notebook is the same as the version used during the Mlflow registering process.
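The same comparison can be made from inside a Notebook cell. This is a minimal sketch; the expected version string is whatever was used when the model was registered, and the function name python_matches is illustrative.

```python
import sys


def python_matches(expected, actual=None):
    """Return True if the interpreter version starts with the expected components.

    expected is a string such as '3.8' or '3.8.8'; only the components
    that are given are compared.
    """
    if actual is None:
        actual = sys.version_info[:3]
    wanted = tuple(int(part) for part in expected.split("."))
    return tuple(actual[:len(wanted)]) == wanted
```

For example, python_matches("3.8.8") compares the running interpreter with the version pinned in the environment example at the end of this page.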

Model runs in Notebook, but fails when staging or testing the model version¶

The model runs successfully in Notebook, but staging or testing the model version fails.

Cause¶

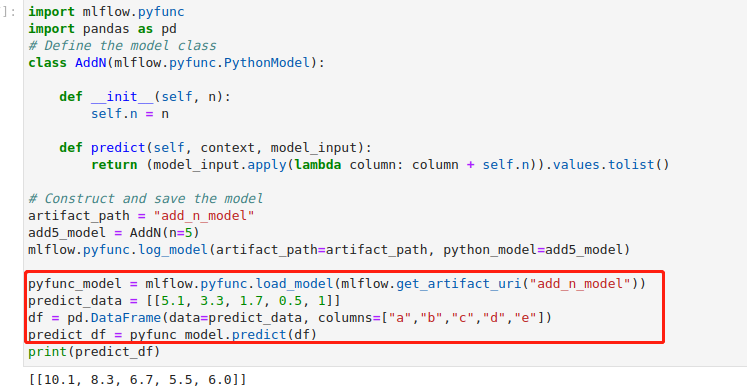

The input parameters of the model are packed as a DataFrame. Follow these steps to identify the cause:

Go to AI Lab, and open the Notebook instance of the model.

From File Browser, open the model-staging file in JupyterLab.

Run mlflow.{framework}.save_model to save the model files in Notebook. Here, {framework} refers to the framework you use.

Run mlflow.pyfunc.load_model to test whether you can load the model.

If you can load the model, pack the prediction data into DataFrame format with the pd.DataFrame function and run the predict function to test the model.
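Steps 4 and 5 can be sketched as a single helper. It assumes that the model files were already saved to a local directory in step 3 and that mlflow and pandas are installed in the Notebook; the function name check_saved_model and its arguments are hypothetical.

```python
def check_saved_model(model_dir, rows, columns):
    """Load a model saved under model_dir and run predict on a DataFrame."""
    # Imported inside the function so that the sketch can be loaded
    # even where mlflow and pandas are not installed.
    import mlflow.pyfunc
    import pandas as pd

    # Step 4: an exception here points to a problem in the model files.
    loaded = mlflow.pyfunc.load_model(model_dir)

    # Step 5: pack the prediction data as a DataFrame and call predict.
    frame = pd.DataFrame(rows, columns=columns)
    return loaded.predict(frame)
```

For example, after the model is saved to a directory named saved_model, a call such as check_saved_model("saved_model", [[5, 6]], ["a", "b"]) exercises both steps; the directory name, values, and column names here are placeholders for your own.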

Solutions¶

Solution 1: if you fail to load the model in Step 4, check and fix the issues in the local model files.

Solution 2: if the test in Step 5 passes, record the Mlflow and Python versions and contact your administrator.

“test success, number of test result not match repeat from model” error when staging a model version¶

If the output parameter type of a model is object, a "test success, number of test result not match repeat from model" error occurs when you stage the model version.

Cause¶

The output results of the model are not in object format.

Solution¶

Double-check the output parameter to ensure the value is in object format. An example of object type looks like this:

[{ "data": [ ["2021-07-31", 5, 6], ["2021-07-31", 5, 6], ["2021-07-31", 5, 6]]}]

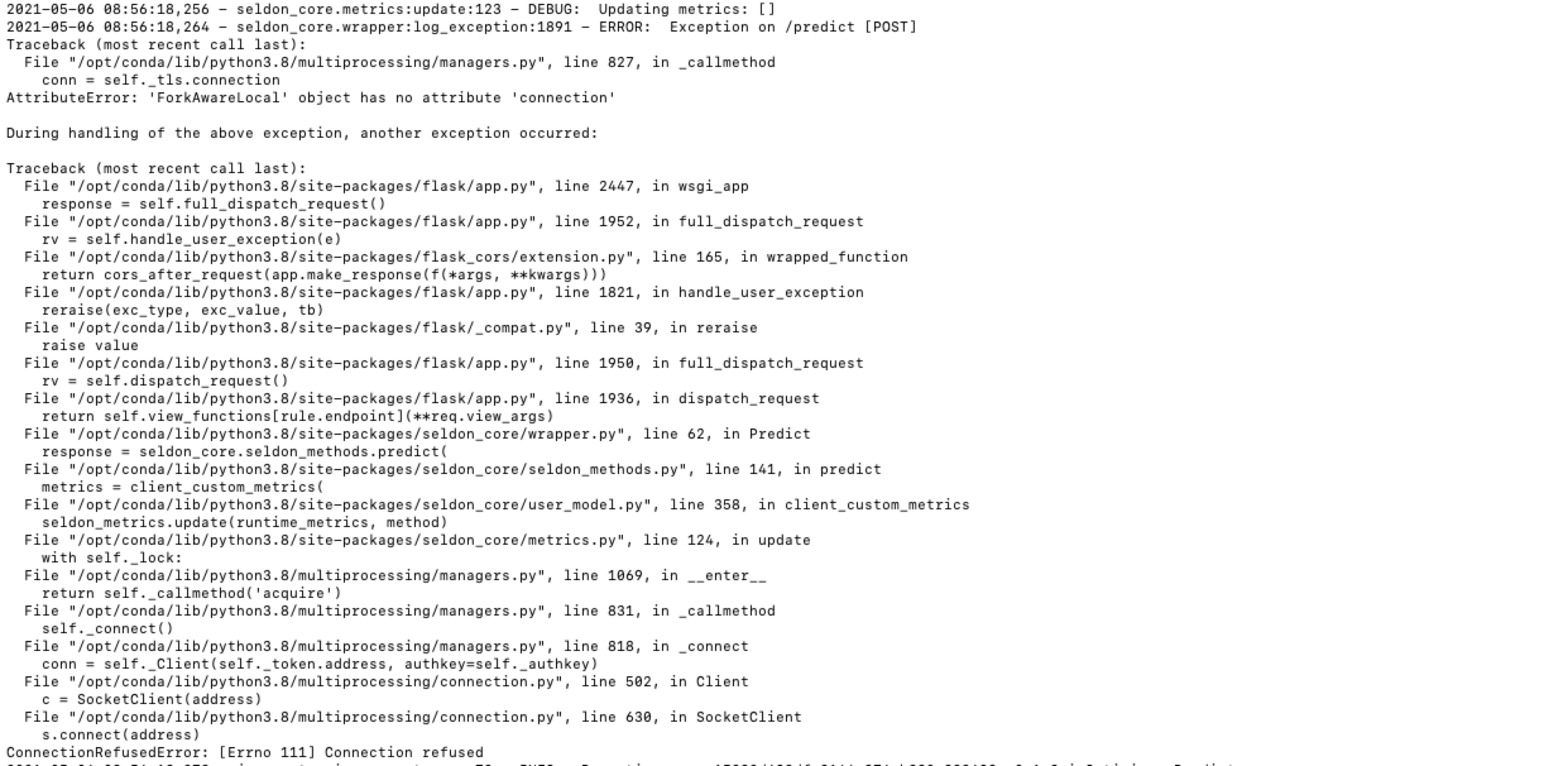
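A quick structural check of the model output can catch this before staging. The sketch below mirrors only the shape of the example above (a list of dictionaries, each with a data entry that holds a list of rows); the exact rules that AI Hub applies are not stated here, so treat it as an approximation. The function name is_object_output is illustrative.

```python
def is_object_output(value):
    """Return True if value is a non-empty list of dicts whose 'data' is a list of rows."""
    if not isinstance(value, list) or not value:
        return False
    for item in value:
        if not isinstance(item, dict) or "data" not in item:
            return False
        rows = item["data"]
        if not isinstance(rows, list):
            return False
        if not all(isinstance(row, list) for row in rows):
            return False
    return True
```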

An error occurs in the log file when testing or deploying a model version¶

An error occurs in the log file when you test or deploy a model version:

Cause¶

This error occurs because of insufficient memory.

Solutions¶

Solution 1: if the error occurs when testing the model, add the force_to_stage parameter to the Input Parameter box to ignore the error and stage the model version. For more information on model parameters, see Set Model Parameters.

Solution 2: if the error occurs when deploying the model, increase the values of Resource Request and Resource Limit. For more information on model deployment, see Deploy a Model Version.

An error occurs when serializing with Cloudpickle¶

An error occurs when you serialize data with Cloudpickle, for example:

  File "/opt/conda/lib/python3.8/site-packages/mlflow/pyfunc/__init__.py", line 522, in load_model
    model_impl = importlib.import_module(conf[MAIN])._load_pyfunc(data_path)
  File "/opt/conda/lib/python3.8/site-packages/mlflow/pyfunc/model.py", line 223, in _load_pyfunc
    python_model = cloudpickle.load(f)
AttributeError: Can't get attribute '_make_function' on <module 'cloudpickle.cloudpickle' from '/opt/conda/lib/python3.8/site-packages/cloudpickle/cloudpickle.py'>
2022-06-07 01:44:27,878 - jaeger_tracing:report_span:73 - INFO: Reporting span 874aec19bfb8dc2f:b5d9f8f14bbebca4:0:1 MlflowPredictor.Predict
2022/06/07 01:44:28 WARNING mlflow.pyfunc: The version of CloudPickle that was used to save the model, `CloudPickle 2.1.0`, differs from the version of CloudPickle that is currently running, `CloudPickle 2.0.0`, and may be incompatible
2022-06-07 01:44:28,388 - seldon_core.wrapper:log_exception:1891 - ERROR: Exception on /predict [POST]
Traceback (most recent call last):
  File "/opt/conda/lib/python3.8/site-packages/seldon_core/user_model.py", line 236, in client_predict

Cause¶

The CloudPickle version used when the model was trained differs from the version used when the model is tested (2.1.0 and 2.0.0 in the example log).

Solution¶

Use the same CloudPickle version when you train and test the model. One way to do this is to pin the version in the conda environment that is logged with the model:

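import cloudpickle
import mlflow.pyfunc

# Conda environment that is logged with the model. Pinning cloudpickle to the
# version running in Notebook keeps training and testing on the same version.
env = {
    'name': 'model',
    'channels': ['defaults'],
    'dependencies': [
        'python=3.8.8',
        {'pip': [
            'mlflow==1.13.1',
            'scikit-learn',  # PyPI name of the sklearn package
            'cloudpickle=={}'.format(cloudpickle.__version__),
            'eapdataset',
        ]}
    ]
}

# wind_power_forecast is your own mlflow.pyfunc.PythonModel subclass, and
# fitted_model is the trained model that it wraps.
wind_power_forecast_model = wind_power_forecast(model=fitted_model)
mlflow.pyfunc.log_model(artifact_path="model", python_model=wind_power_forecast_model, conda_env=env)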
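To see which two versions are in conflict, the warning line in the test log can be parsed. The sketch below assumes that the warning has the same wording as in the example log above; the function name cloudpickle_versions is illustrative.

```python
import re

# Matches the two versions quoted in the mlflow.pyfunc warning line.
_PATTERN = re.compile(
    r"used to save the model, `CloudPickle ([\d.]+)`.*"
    r"currently running, `CloudPickle ([\d.]+)`"
)


def cloudpickle_versions(log_line):
    """Return (saved_with, running) versions from the warning, or None if absent."""
    match = _PATTERN.search(log_line)
    return (match.group(1), match.group(2)) if match else None
```

The first value is the version to pin in the environment if you keep the trained model as it is; the second is the version running where the model is tested.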